What is the problem?

We are AI net-positive. We believe that AI is and will be a powerful tool to advance our lives, our economy, and our opportunities to thrive, but only if we are careful to ensure that the AI we use does not perpetuate and mass-produce historical and new forms of harm.

Why now?

We are at a critical juncture because AI is becoming an increasingly important part of our daily lives — and decades of progress made and lives lost to promote equal opportunity can essentially be unwritten in lines of code.

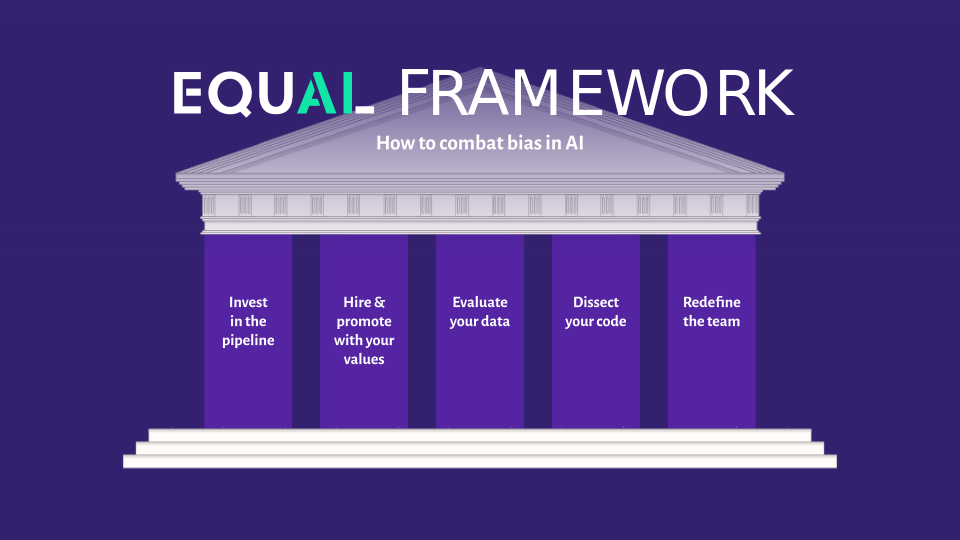

The EqualAI Framework

Here are steps to take if you are committed to reducing harm in your AI through responsible AI governance.

Invest in the pipeline

Research suggests that homogeneous teams, like those that make up many tech giants’ engineering corps, are more likely than diverse teams to produce algorithms that cause harm. A lack of diversity in tech creation can also become a life-or-death issue given how many of these technologies are ultimately used: in self-driving cars, in deciding who can access ventilators or other critical health care services during a crisis, or in determining an individual’s fate in the criminal justice system.

Many reputable organizations are working to ensure that our next generation of coders and tech executives represents a broader cross-section of our population. If you are serious about making better AI and ensuring more diversity in our tech creation and management, support these forward-thinking organizations, such as AI4All, Girls Who Code, Code.org, Black Girls Code, and Kode with Klossy.

Hire and promote with your values

To create and sustain a diverse workplace, and produce better AI, you must train your employees to recognize and address harms in hiring, evaluation and promotion decisions. Your AI programs must be in sync with these efforts or they could undermine them. AI used for hiring, evaluations and terminations can carry embedded harms and requires constant checks, because it continually learns new patterns and may produce inequitable employment decisions.

Ensure you have a broad cross-section of diversity in each candidate panel.

Ensure humans remain in decision-making processes and constantly check for harms.

Evaluate your data

Find out what you can about the datasets on which your AI was built and trained. Try to identify the gaps so that they can be rectified, addressed or, at a minimum, clarified for users. See the EqualAI Checklist© for a starting point for evaluating your datasets, and consider FTC guidance, including an evaluation to determine:

How representative is the data set?

Does the data model account for harms?

How accurate are the predictions?
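As a rough illustration of the first and third questions, the sketch below compares each group's share of a dataset with a reference share and computes prediction accuracy per group. It is a minimal example under stated assumptions, not part of the EqualAI Checklist© or FTC guidance: the record fields (`group`, `label`, `prediction`), the function names, and the reference shares are all hypothetical.

```python
from collections import Counter

def representation_gaps(records, reference_shares, group_key="group"):
    """Return (dataset share - reference share) for each reference group.

    A negative value means the group is under-represented in the dataset
    relative to the reference population (hypothetical shares).
    """
    counts = Counter(r[group_key] for r in records)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("records is empty")
    return {g: counts.get(g, 0) / total - share
            for g, share in reference_shares.items()}

def accuracy_by_group(records, group_key="group"):
    """Return the fraction of correct predictions within each group."""
    seen, correct = Counter(), Counter()
    for r in records:
        seen[r[group_key]] += 1
        if r["label"] == r["prediction"]:
            correct[r[group_key]] += 1
    return {g: correct[g] / seen[g] for g in seen}

# Toy data for illustration only; field names are assumptions.
records = [
    {"group": "A", "label": 1, "prediction": 1},
    {"group": "A", "label": 0, "prediction": 0},
    {"group": "A", "label": 1, "prediction": 1},
    {"group": "B", "label": 1, "prediction": 0},
]
reference_shares = {"A": 0.5, "B": 0.5}

gaps = representation_gaps(records, reference_shares)
accuracy = accuracy_by_group(records)
```

A large negative gap or a markedly lower accuracy for one group is a signal to investigate further, not a verdict on its own; whether the data model accounts for harms still requires human review.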

Test your AI

As with AI used in employment decisions, your AI programs, and particularly customer-facing systems, should be checked for harm on a routine basis. AI learns new patterns as it is fed new data and can adopt new harms. See the FTC blog and EqualAI Checklist© for additional guidance, and enlist experts, such as ORCAA, to help you test your AI.
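One way a routine check might look in practice is sketched below: for each new batch of decisions, compute the rate of favorable outcomes per group and flag any group whose rate falls well below that of the best-served group. This is a simplified, hypothetical example; the function names and the `min_ratio` threshold of 0.8 are assumptions chosen for illustration, and it is no substitute for the guidance and expert testing mentioned above.

```python
def favorable_rates(decisions):
    """Return the share of favorable outcomes per group.

    `decisions` is an iterable of (group, favorable) pairs, where
    `favorable` is True when the system's outcome benefits the person.
    """
    totals, favorable = {}, {}
    for group, ok in decisions:
        totals[group] = totals.get(group, 0) + 1
        favorable[group] = favorable.get(group, 0) + (1 if ok else 0)
    return {g: favorable[g] / totals[g] for g in totals}

def flag_disparity(decisions, min_ratio=0.8):
    """Return groups whose favorable rate, relative to the highest
    group's rate, is below `min_ratio` (an illustrative threshold)."""
    rates = favorable_rates(decisions)
    if not rates:
        return {}
    highest = max(rates.values())
    if highest == 0:
        return {}  # no group received a favorable outcome
    return {g: r / highest for g, r in rates.items()
            if r / highest < min_ratio}

# Toy batch for illustration only.
batch = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]
flagged = flag_disparity(batch)
```

Running a check like this on every new batch of data, rather than once at launch, reflects the point that AI can adopt new harms as it learns new patterns. Flagged groups should be reviewed by people, not resolved automatically.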

Redefine the team

AI products should be tested prior to release for and by those under-represented in their creation or in the underlying datasets. The current makeup of your team and of your datasets need not be your ultimate fate. Once you have completed steps 3 and 4 (evaluating your data and testing your AI), you can identify potential end users, or those who could be impacted downstream, who were insufficiently represented on your team and in your datasets. Ensuring you have done sufficient testing before your product goes to market is critical.

Mission statement

Video produced by NP Agency

EqualAI is a nonprofit organization created to help avoid harms and build trust in AI systems by defining and operationalizing responsible AI governance.

Together we can create #EqualAI

As AI goes mainstream, help us remove harms and create it equally.